AI Memory Infrastructure

The memory layer for AI products — persistent, explainable, automatic.

No vector DBs. No glue code. No prompt hacks. Works with OpenAI, Claude, and Gemini.


    const response = await fetch("https://mnexium.com/api/v1/responses", {
      method: "POST",
      headers: {
        "x-mnexium-key": "mnx_live_...",
        "Content-Type": "application/json"
      },
      body: JSON.stringify({
        model: "gpt-4o-mini",
        input: "Give me recipes for my favorite food?",
        mnx: {
          subject_id: "user_123",
          chat_id: "cc2debb4...523894525f77",
          log: true,
          learn: true,
          recall: true,
          history: true,
          state: { load: true, key: "current_task" }
        }
      })
    });

Provider Agnostic

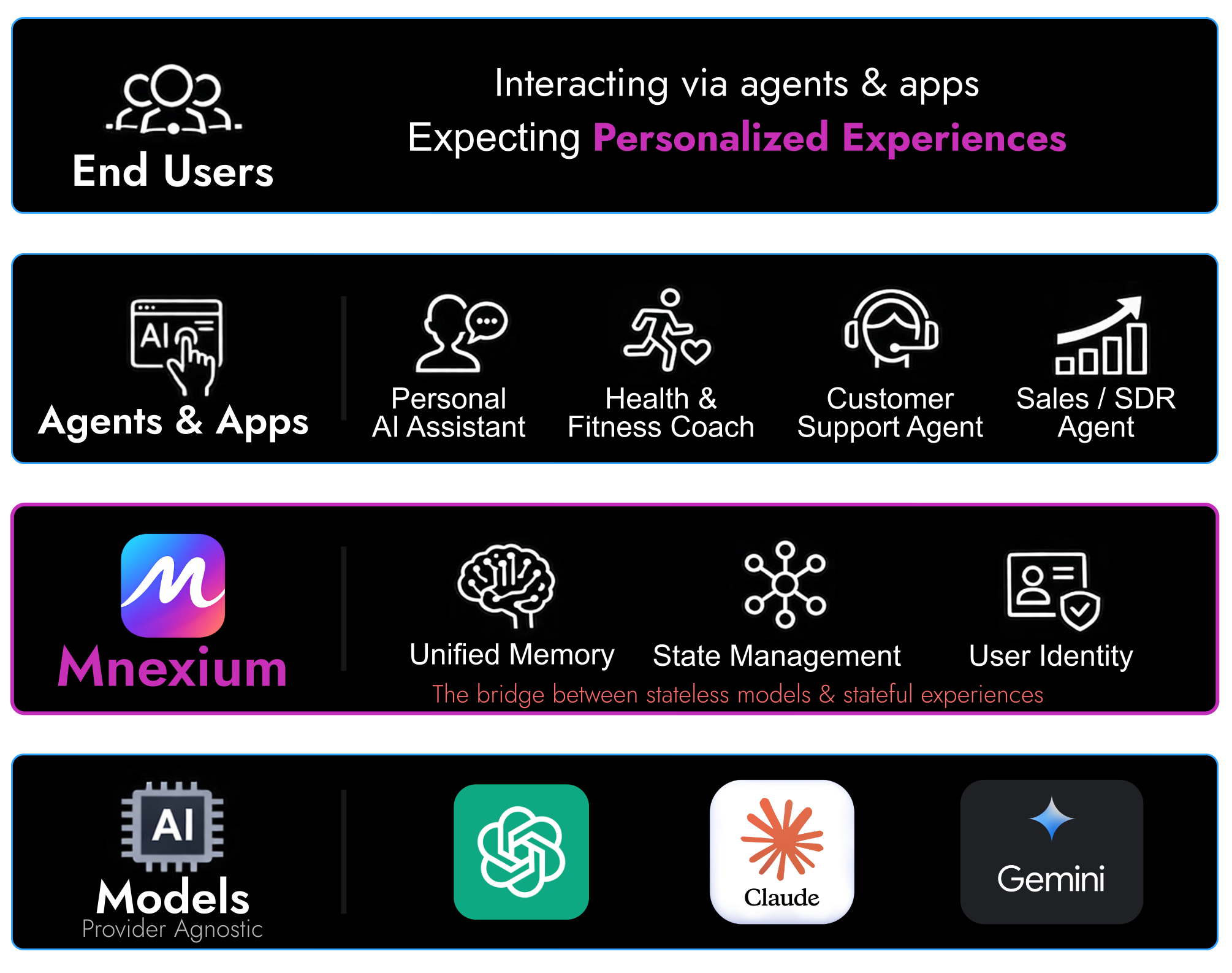

The Bridge Between Models & Experiences

Mnexium sits between your AI models and your users, providing unified memory, state management, and identity. Works with OpenAI, Anthropic Claude, and Google Gemini — switch providers without changing your memory layer.


Memory For AI

Users repeat themselves. Agents lose context. Tasks reset. Mnexium gives AI long-term memory — safely, automatically. Four complementary systems for context management so your agents actually learn and improve over time.

Your memory, your control. Every memory stays connected to your application — portable, queryable, and fully owned by you.

- Chat History

The raw conversation log — every message sent and received within a session. Used for context continuity.

- Agent Memory

Extracted facts, preferences, and context about a user. Persists across all conversations and sessions.

- Agent State

Short-term, task-scoped working context for agentic workflows. Tracks task progress and pending actions.

- Observability

Full audit trail of every API call, memory creation, and auth event. See what your agent did and why.

How it works

Memory that persists across sessions

Traditional chatbots forget everything the moment a session ends. Users repeat themselves. Agents lose context. Multi-step tasks reset. Mnexium changes this by giving your AI persistent memory that works automatically.

With just a few lines of code, your agent learns from conversations, stores what matters, and recalls relevant context when users return — even days or weeks later. Every memory is scored, searchable, and explainable.

User says: "I prefer pescatarian meals."

User asks: "What should I cook this week?"

Full observability into what memories were used and why.
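The learn-then-recall flow described above might look like the following sketch. It reuses the `/api/v1/responses` endpoint, the `x-mnexium-key` header, and the `mnx` fields from the example at the top of this page. The `buildRequest` helper and the chat IDs are illustrative assumptions, not part of Mnexium's API.

```javascript
// Hypothetical helper: builds the fetch() arguments for one turn.
// Endpoint, header name, and mnx field names come from the example request;
// the helper name and the IDs are made up for illustration.
function buildRequest(apiKey, subjectId, chatId, input, mnxFlags) {
  return {
    url: "https://mnexium.com/api/v1/responses",
    init: {
      method: "POST",
      headers: {
        "x-mnexium-key": apiKey,
        "Content-Type": "application/json",
      },
      body: JSON.stringify({
        model: "gpt-4o-mini",
        input,
        mnx: { subject_id: subjectId, chat_id: chatId, ...mnxFlags },
      }),
    },
  };
}

// Session 1: the user states a preference; learn: true lets it be stored.
const teach = buildRequest("mnx_live_...", "user_123", "chat_monday",
  "I prefer pescatarian meals.", { log: true, learn: true });

// Session 2 (a different chat, possibly days later): recall: true asks for
// relevant memories to be brought back into context.
const ask = buildRequest("mnx_live_...", "user_123", "chat_friday",
  "What should I cook this week?", { log: true, recall: true });

// To send a turn from an async context:
//   const res = await fetch(teach.url, teach.init);
```

Both turns share the same `subject_id` but use different `chat_id` values, which is what lets the second session draw on what was learned in the first.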

Simple integration

Add Memory in Seconds

No SDKs required. Just add the `mnx` object to your existing OpenAI calls. We handle storage, embeddings, and retrieval automatically.

- Automatic persistence. Set `log: true` and every turn is logged with full context.

- History injection. Enable `history: true` to prepend prior messages automatically.

- Smart memorization. Let AI decide what's worth remembering with `learn: true`.

    // Search memories by semantic similarity
    const res = await fetch(
      "https://mnexium.com/api/v1/memories/search?" +
        "subject_id=user_123&q=favorite+food",
      { headers: { "x-mnexium-key": "mnx_live_..." } }
    );
    // Parse the JSON body to get the matching memories
    const memories = await res.json();

Response:

    {
      "data": [
        {
          "id": "mem_abc123",
          "text": "User loves Italian cuisine",
          "kind": "preference",
          "importance": 75,
          "score": 92.5
        }
      ]
    }

Use Cases

Built for Real-World AI Apps

From personalized chatbots to multi-step agents, Mnexium handles the memory layer so you can focus on your product.

- Personalized Chatbot

Build a support assistant that remembers user preferences across sessions. No more "What's your name again?"

- Resumable Agent

Build a travel planner that tracks multi-step tasks. User leaves mid-booking? Agent picks up exactly where they left off.

- Multi-tenant SaaS

Map `project_id` to orgs and `subject_id` to users. Each workspace gets isolated memory with zero cross-contamination.

- Tool Output Tracking

Track pending emails, tickets, or payments in Agent State. Let the agent know what's still in flight.
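For the resumable-agent case, one way to keep scoping consistent is to derive the `mnx` block from your own user and task records. The sketch below assumes the `state: { load, key }` shape from the example request at the top of this page. The `mnxForTask` helper and the `task:<name>` key convention are hypothetical. How `project_id` is supplied is not shown on this page, so it is left out.

```javascript
// Hypothetical helper: one mnx block per (user, task) pair.
// subject_id, chat_id, and state.{load,key} appear in the example request;
// the "task:<name>" key convention is an assumption for illustration.
function mnxForTask(user, chatId, taskName) {
  if (!user || !user.id) throw new Error("user.id is required");
  return {
    subject_id: user.id, // one subject per end user
    chat_id: chatId,
    log: true,
    recall: true,
    history: true,
    state: { load: true, key: `task:${taskName}` },
  };
}

const mnx = mnxForTask({ id: "user_123" }, "chat_42", "flight_booking");
console.log(JSON.stringify(mnx.state));
// prints: {"load":true,"key":"task:flight_booking"}
```

Keeping the state key stable for a given task is what would let a returning user's next request load the same working context.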

Pricing

Start free, scale as you grow

Generous free tier for developers. Predictable pricing for production workloads.

Beta

Free

Free for beta testers & early customers.

- Up to 1,000 Memory Actions

- Up to 10,000 API calls

- All documented features

- Please contact us for more information

Frequently asked questions

- How do I integrate Mnexium with my existing code?

Add an `mnx` object to your API calls. We support both OpenAI's Chat Completions and Responses APIs. No new SDK required — use what you already have and set options to control memory behavior.

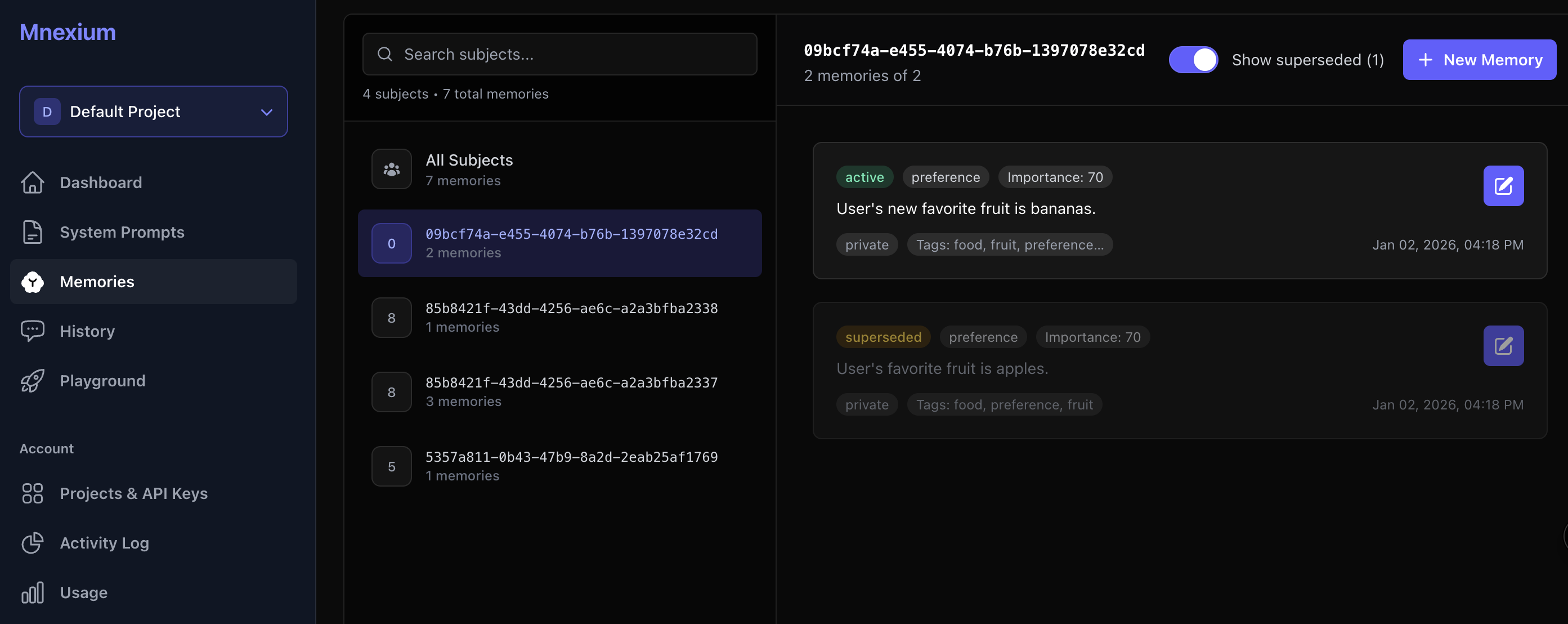

- What is a memory and how does versioning work?

A memory is a structured record. When new memories conflict with existing ones, the old memory is automatically marked as 'superseded' while the new one becomes 'active'. You can visualize the full evolution chain with Memory Graphs.
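To make the versioning behavior concrete, here is a minimal sketch of how a client could model it. The `id`, `text`, `kind`, and `importance` fields mirror the search response shown earlier, and the `active` and `superseded` values come from the description above. The `status` and `superseded_by` field names are assumptions for illustration, not confirmed API fields.

```javascript
// Hypothetical memory records for one user.
// status / superseded_by are assumed field names, not documented ones.
const memories = [
  { id: "mem_1", text: "User is vegetarian", kind: "preference",
    importance: 70, status: "superseded", superseded_by: "mem_2" },
  { id: "mem_2", text: "User is pescatarian", kind: "preference",
    importance: 75, status: "active", superseded_by: null },
];

// Follow the chain from any memory to the version that is currently active.
// Returns undefined if the chain is broken or loops back on itself.
function resolveActive(startId, records) {
  const byId = new Map(records.map((m) => [m.id, m]));
  const seen = new Set();
  let current = byId.get(startId);
  while (current && current.status === "superseded") {
    if (seen.has(current.id)) return undefined; // guard against cycles
    seen.add(current.id);
    current = byId.get(current.superseded_by);
  }
  return current;
}

console.log(resolveActive("mem_1", memories).text);
// prints: User is pescatarian
```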

- What's the difference between Memory, Agent State, and Chat History?

Chat History is the raw conversation log within a session. Agent Memory stores long-term facts about users that persist across all sessions. Agent State is short-term, task-scoped working context for tracking agent progress and pending actions.

- How is Mnexium different from a vector database?

Mnexium is an opinionated memory layer built for AI agents. We handle automatic learning, semantic deduplication, memory versioning, recall scoring, user profiles, governance, and full observability—not just embeddings.

- Can I use my own API keys?

Yes. Pass your OpenAI key via `x-openai-key`, your Anthropic key via `x-anthropic-key`, or your Google key via `x-google-key`. We never store your provider keys; they're used only for the duration of the request.
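As a rough sketch, the provider key can be attached per request alongside the Mnexium key. The header names are the ones listed above. The `headersFor` helper and the provider labels used as lookup keys are hypothetical.

```javascript
// Header names come from the answer above; the lookup keys are our own labels.
const PROVIDER_KEY_HEADERS = new Map([
  ["openai", "x-openai-key"],
  ["anthropic", "x-anthropic-key"],
  ["google", "x-google-key"],
]);

// Hypothetical helper: merges the Mnexium key with exactly one provider key.
function headersFor(provider, providerKey, mnexiumKey) {
  const headerName = PROVIDER_KEY_HEADERS.get(provider);
  if (!headerName) throw new Error(`Unsupported provider: ${provider}`);
  return {
    "x-mnexium-key": mnexiumKey,
    "Content-Type": "application/json",
    [headerName]: providerKey,
  };
}

// Placeholder key values; substitute your own.
const headers = headersFor("anthropic", "<your-anthropic-key>", "mnx_live_...");
```

Only the header for the chosen provider is set, so switching providers means changing one argument rather than the rest of the request.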

- What models and providers do you support?

We're provider-agnostic. Currently supported: OpenAI GPT models, Anthropic Claude, and Google Gemini. Use the provider you already work with.

- Is my data secure?

Yes. All data is encrypted at rest and in transit. We support scoped API keys with granular permissions (read/write/delete per resource type), and you can revoke access instantly. Full audit logs track every action.

- What are User Profiles?

Profiles are business-defined and AI-generated summaries of a user. They provide an overview of preferences, facts, and context, which is useful for personalizing responses without loading all individual memories.

Contact Us

Have questions about Mnexium? Want to discuss integrations?

We'd love to hear from you.

Ready to give your AI a memory?

Start building with persistent memory today. Free tier includes everything you need to get started.